Deciding whether to keep HDR on or off can be confusing. High Dynamic Range promises better visuals, but is it always the best choice?

This guide will help you understand HDR and make the right decision for your setup.

What Exactly is HDR (High Dynamic Range)?

HDR stands for High Dynamic Range. Dynamic range is the difference between the brightest whites and the darkest blacks an image can display, and HDR widens that range.

Think of it as expanding the range of colors and contrast compared to standard dynamic range (SDR). This allows for more detail in both very bright and very dark areas of a picture simultaneously.

The goal is to create a more realistic and lifelike video.

It’s worth noting that HDR is also a popular technique in photography. In photography, HDR typically involves capturing multiple images of the same scene at different exposure settings (bracketing).

These separate exposures are then computationally merged into a single image that retains detail in both the very bright and very dark areas, which might be lost in a single standard exposure.
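As a rough illustration of that merging step, here is a minimal sketch in Python. It is not how any particular camera or phone does it: the weighting rule (favoring mid-tones over clipped values) and the sample pixel values are assumptions chosen for clarity, and real pipelines also align the frames and work on full-color images.

```python
def merge_exposures(exposures):
    """Blend bracketed exposures of one scene, pixel by pixel.

    `exposures` is a list of images; each image is a flat list of
    luminance values from 0.0 (black) to 1.0 (white).
    """
    merged = []
    for pixel_values in zip(*exposures):
        # Weight each sample by how far it is from clipping: mid-tones
        # get the most weight, crushed blacks and blown whites the least.
        # The tiny constant avoids dividing by zero if every sample clips.
        weights = [1.0 - abs(2.0 * v - 1.0) + 1e-6 for v in pixel_values]
        total = sum(weights)
        merged.append(sum(w * v for w, v in zip(weights, pixel_values)) / total)
    return merged


# Three hypothetical exposures of a two-pixel scene: [shadow, sky].
under = [0.02, 0.55]   # sky is well exposed, shadow is crushed
normal = [0.20, 0.95]
over = [0.50, 1.00]    # shadow is well exposed, sky is blown out
print(merge_exposures([under, normal, over]))
```

Each merged pixel ends up dominated by whichever exposure captured it best, which is the basic idea behind keeping detail at both ends of the range.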

While related to managing high dynamic range, this photographic capture process is distinct from the HDR display technology primarily discussed in this article.

Benefits of HDR

The primary benefit of HDR is enhanced picture quality.

Here are some specific advantages:

- Brighter Highlights: Specular highlights, like reflections or the sun, appear much brighter and more impactful without clipping detail.

- Deeper Blacks: Dark areas can get darker while still revealing subtle detail, instead of just being crushed into blackness.

- Wider Color Gamut: HDR often comes paired with technologies that display a broader range of colors, making images more vibrant and true-to-life.

- Increased Contrast Ratio: The expanded difference between the darkest and brightest points creates a more dynamic and dimensional image.

Overall, HDR aims for a visual experience closer to what the human eye sees in the real world.
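To put numbers on "contrast ratio," it can help to express a display's range in photographic stops, where each stop is a doubling of light. The sketch below assumes you know a display's peak brightness and black level in nits; the two example displays are hypothetical figures for illustration, not measurements of real products.

```python
import math


def contrast_ratio(peak_nits, black_nits):
    """Ratio between the brightest and darkest luminance a display shows."""
    return peak_nits / black_nits


def dynamic_range_stops(peak_nits, black_nits):
    """Same range expressed in stops (each stop doubles the light)."""
    return math.log2(contrast_ratio(peak_nits, black_nits))


# Hypothetical figures, for illustration only.
sdr_like = dynamic_range_stops(peak_nits=300, black_nits=0.3)    # 1000:1
hdr_like = dynamic_range_stops(peak_nits=1000, black_nits=0.05)  # 20000:1
print(f"SDR-like display: {sdr_like:.1f} stops")
print(f"HDR-like display: {hdr_like:.1f} stops")
```

Note that a lower black level widens the range just as effectively as a higher peak brightness, which is why both specifications matter.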

Potential Pitfalls: Why You Might Turn HDR Off

Despite the benefits, HDR isn’t always perfect. Sometimes, enabling HDR can lead to unexpected visual issues.

Poor HDR implementation on some displays can result in a dim or washed-out picture. Inaccurate color mapping might make scenes look unnatural.

If the source content isn’t properly mastered for HDR, the results can be worse than standard SDR.

Some older or budget displays might claim HDR support but lack the necessary brightness or contrast capabilities, leading to a subpar experience.

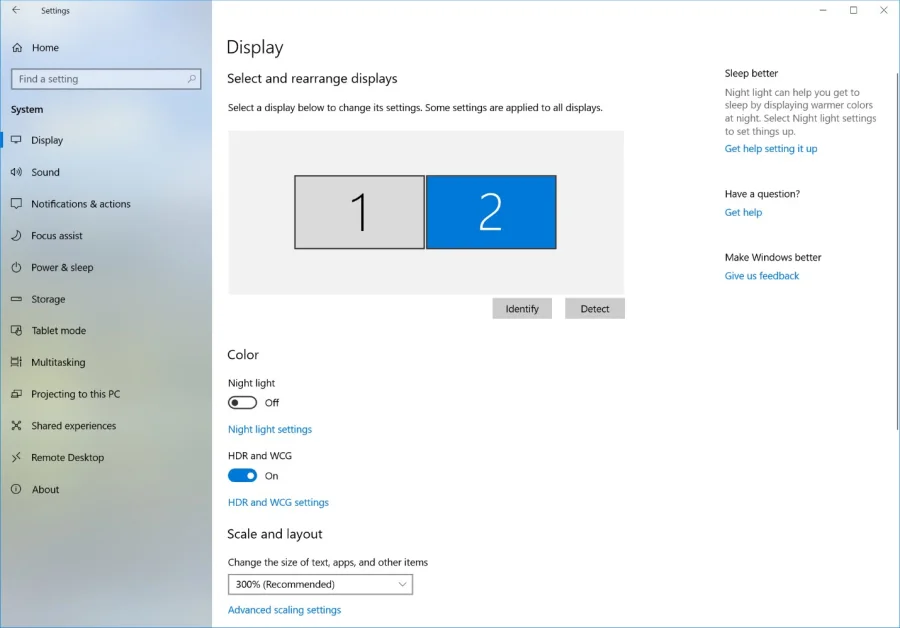

Operating systems like Windows can sometimes struggle with HDR implementation, making SDR content look odd when HDR is globally enabled.

Checking Your Gear: Is Your Setup Truly HDR Capable?

True HDR requires a compatible ecosystem. Your display (TV or monitor) must explicitly support an HDR standard (like HDR10, Dolby Vision, HLG, or HDR10+).

Look for specifications regarding peak brightness (measured in nits) and contrast ratio; higher numbers are generally better for HDR.

Your source device (Blu-ray player, game console, streaming stick, PC graphics card) must also support HDR output.

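The connection between them needs sufficient bandwidth: typically HDMI 2.0a/b or HDMI 2.1 ports on both devices, or DisplayPort 1.4 or higher, joined by a cable rated for that speed. HDMI cables are certified by speed class rather than version number, so look for Premium High Speed or Ultra High Speed on the packaging.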
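To see why bandwidth matters, here is a back-of-the-envelope sketch of how much picture data a signal carries. It counts only the visible pixels and deliberately ignores blanking intervals and link encoding overhead, so the real requirement on the cable is noticeably higher than these figures. The function name and parameters are illustrative.

```python
def video_data_rate_gbps(width, height, fps, bit_depth, samples_per_pixel=3.0):
    """Approximate payload of the visible picture, in gigabits per second.

    samples_per_pixel: 3.0 for full color (RGB or 4:4:4),
    2.0 for 4:2:2 and 1.5 for 4:2:0 chroma subsampling.
    """
    bits_per_second = width * height * fps * bit_depth * samples_per_pixel
    return bits_per_second / 1e9


# 4K at 60 frames per second in three common configurations.
sdr_8bit = video_data_rate_gbps(3840, 2160, 60, bit_depth=8)
hdr_10bit = video_data_rate_gbps(3840, 2160, 60, bit_depth=10)
hdr_10bit_420 = video_data_rate_gbps(3840, 2160, 60, bit_depth=10,
                                     samples_per_pixel=1.5)
print(f"8-bit full color:   {sdr_8bit:.2f} Gbit/s")
print(f"10-bit full color:  {hdr_10bit:.2f} Gbit/s")
print(f"10-bit 4:2:0:       {hdr_10bit_420:.2f} Gbit/s")
```

Moving from 8-bit to 10-bit color raises the payload by 25 percent at the same resolution and frame rate, which is why a link that handles 4K SDR comfortably may need chroma subsampling or a faster connection for 4K HDR.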

Ensure all relevant settings on both the display and source device are configured correctly for HDR.

The Importance of Source Material

Having HDR hardware is only half the battle. The content you are viewing must also be mastered in HDR.

Playing Standard Dynamic Range (SDR) content on an HDR display with HDR mode enabled can sometimes lead to issues.

The display or operating system tries to convert the SDR signal to fit the HDR container, guessing how bright and how saturated it should appear, which doesn’t always work well.
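One way to picture this conversion is the sketch below, which places an SDR signal value into a PQ-encoded HDR signal (PQ, defined in SMPTE ST 2084, is the transfer curve used by HDR10 and Dolby Vision). The PQ constants are from that standard. Everything else is an assumption for illustration: the simple 2.2 gamma decode, and the choice of 203 nits for SDR white, a reference level suggested in ITU-R BT.2408. Actual operating systems and displays use their own pipelines and settings.

```python
# PQ (SMPTE ST 2084) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits):
    """Convert absolute luminance (0 to 10000 nits) to a PQ signal in 0..1."""
    y = max(nits, 0.0) / 10000.0
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def sdr_to_pq(sdr_signal, sdr_white_nits=203.0, gamma=2.2):
    """Place an SDR signal value (0..1) inside the PQ container.

    Assumptions: a plain power-law decode and a chosen brightness
    for SDR white. Both are guesses the converter has to make.
    """
    linear = sdr_signal ** gamma           # undo the SDR gamma curve
    return pq_encode(linear * sdr_white_nits)


for white in (100.0, 203.0, 400.0):
    print(f"SDR white at {white:.0f} nits -> PQ signal {sdr_to_pq(1.0, white):.3f}")
```

The same SDR white lands at very different points in the HDR signal depending on the assumed brightness, which is one reason SDR content can look dim or washed out when the guess does not suit the display or the room.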

Native HDR content (like 4K Blu-rays, certain streaming shows/movies, modern video games) is designed to take full advantage of HDR capabilities.

Mixing HDR and SDR content (e.g., watching an SDR video while your OS is in HDR mode) can be problematic on some systems.

When to Use HDR

You should generally aim to use HDR when your display, source device, connection, and content all support it. Use HDR when viewing native HDR content (movies, shows, games specifically labelled as HDR, Dolby Vision, etc.).

Enable HDR when playing modern video games designed with HDR support on a compatible console or PC.

Turn on HDR if your display handles it well and accurately enhances the picture quality without introducing noticeable flaws.

It’s often beneficial when watching content specifically graded for high brightness and contrast, like nature documentaries or visually rich films.

Specifically for photography, activating your camera or phone’s HDR capture mode is beneficial for static scenes with challenging high contrast, such as landscapes featuring both bright sky and deep shadows.

When Disabling HDR Makes Sense

Sometimes, turning HDR off provides a better experience. Disable HDR if your display’s implementation is poor, making the picture look dim, washed out, or inaccurate.

Turn off HDR if you are primarily viewing SDR content and your system doesn’t handle the conversion well (e.g., SDR content looks wrong when Windows HDR is enabled).

Consider disabling HDR if you notice input lag increases significantly during gaming on certain displays (though this is less common on modern TVs/monitors).

If you find the HDR effect distracting or prefer the look of SDR for certain content, turning it off is a valid personal preference.

For productivity tasks on a computer, where color accuracy for non-HDR workflows is paramount, keeping HDR off might be simpler unless specifically needed.

Regarding photography capture, turn off your device’s auto-HDR mode if shooting fast-moving subjects (to prevent ghosting from merging multiple shots) or if you intentionally want the subject to appear as a dark shape against a bright background (a silhouette effect) instead of capturing details in both bright and dark areas (a balanced exposure).

How to Toggle HDR On Your Devices (TV, Monitor, OS)

The method for enabling or disabling HDR varies by device.

On most TVs, you’ll find HDR settings within the Picture or Display settings menu. Look for options like “HDMI Deep Color,” “Input Signal Plus,” “HDMI Enhanced Format,” or specific HDR picture modes.

On PC monitors, HDR settings might be in the monitor’s On-Screen Display (OSD) menu, often under Picture or Color settings.

In Windows 10/11, right-click the desktop, select “Display settings,” and look for the “HDR” toggle (you might need to select the specific display first).

On macOS (compatible models), go to System Preferences/Settings > Displays and look for the “High Dynamic Range” checkbox.

Game consoles (PlayStation, Xbox) usually have HDR settings under their Video Output or Display settings menus, often auto-detecting compatible TVs.

The Final Verdict

Ultimately, whether to turn HDR on or off depends on your specific setup and content. When HDR works correctly with compatible gear and native content, it offers a superior visual experience.

However, if your hardware struggles, the content isn’t HDR, or the implementation causes issues, sticking with SDR might be better.

Experiment with the settings on your devices. Trust your eyes; choose the setting that looks best to you for the specific content you are viewing.

There is no single “always on” or “always off” answer that fits every situation.
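For readers who like a checklist, the rules of thumb from this guide can be sketched as a small decision helper. The class, field names, and the strict "SDR content means off" rule are simplifications made up for this example; your own eyes remain the final judge.

```python
from dataclasses import dataclass


@dataclass
class Setup:
    display_supports_hdr: bool
    source_supports_hdr: bool
    link_supports_hdr: bool      # ports and cable have enough bandwidth
    content_is_hdr: bool
    hdr_looks_good: bool = True  # your own verdict after comparing by eye


def recommend_hdr(setup: Setup) -> str:
    """Return "on" or "off" following this guide's rules of thumb."""
    chain_ok = (
        setup.display_supports_hdr
        and setup.source_supports_hdr
        and setup.link_supports_hdr
    )
    if not chain_ok:
        return "off"   # some part of the chain cannot carry HDR
    if not setup.content_is_hdr:
        return "off"   # SDR content: avoid a possibly poor conversion
    return "on" if setup.hdr_looks_good else "off"


# Capable gear playing native HDR content that looks right to you.
print(recommend_hdr(Setup(True, True, True, content_is_hdr=True)))
# Same gear, but the content is SDR.
print(recommend_hdr(Setup(True, True, True, content_is_hdr=False)))
```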

Q&A

Do you use HDR for gaming?

HDR is increasingly popular for gaming as it can dramatically enhance visual immersion. When supported by the game and displayed on a capable monitor or TV, HDR allows for brighter highlights, deeper blacks, and a wider range of colors. This can reveal more detail in both dark shadows and bright areas, making game worlds feel more realistic and dynamic. However, proper calibration is key for the best experience.

Does HDR make quality better?

HDR (High Dynamic Range) has the potential to make visual quality significantly better by expanding the range of contrast and color compared to standard SDR. This means brighter whites, darker blacks, and more nuances in between, leading to a more lifelike and detailed image. However, the improvement is entirely dependent on having HDR-compatible content and a display capable of rendering HDR effectively. Poor HDR implementation can sometimes look worse than well-mastered SDR.

Which is better, HDR or non-HDR?

Technically, HDR offers a superior potential viewing experience compared to non-HDR (SDR) because it supports a wider spectrum of colors and luminance levels. This allows for greater detail, depth, and realism in the image. However, the actual result depends heavily on the quality of the display and how the content was mastered. A high-quality SDR image can still look better than a poorly implemented HDR image on a low-quality screen. For compatible content and capable hardware, HDR is generally considered better.
